A Brave New World?

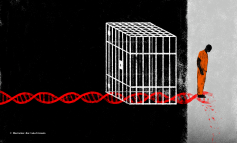

Hardly a day goes by without Artificial Intelligence aficionados trumpeting their game-changing achievements. For a considerable number of A.I. experts, brain-like computers that can learn from experience, autonomous cars and hive-mind-controlled drone swarms are clear evidence that we are slowly but surely entering a brave new world in which brains and computers interact as equals. Among other things, the audacious goal of simulating the human brain on a supercomputer inspired the European Commission to co-fund the £950m (€1.2bn) Human Brain Project (HBP) Flagship.[1]

A by-product of this debate is the realization that as soon as robots walk among us, they will need to follow ethical and legal rules.[2] Should a robot car choose the life of the driver over that of a passer-by? What is the right punishment for robots?

According to the experts, we urgently need an anthropocentric set of rules that prevents intelligent robots from harming humans. Unsurprisingly, the first candidate to supply such rules is Isaac Asimov with his Three Laws of Robotics,[3] which still serve as the blueprint for the behaviour of ‘ethical robots’:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

It is a pity, though, that this world is neither new nor brave, nor intelligent. A.I., both the concept and the field, has been all the rage in academic circles for quite a while,[4] but the initial euphoria was soon replaced by an “appreciation of the profound difficulty of the problem”.[5] To put it simply: A.I. does not work. It is “inhabited by the ghosts of old philosophical theories”,[6] such as the distinction between mind and body, which the field fails to recognise as such. Instead, it advertises its results as an indubitable reality unbounded by theory: a ‘GIVEN’. The open letter signed by more than 800 neuroscientists, which attacks both the Flagship’s science and its organization, is simply a symptom of this recurrent failure.[7]

Laws for Robots?

This article will shed light on some questionable assumptions underpinning the need for ‘laws of robotics’: that computers can understand language; that a judge’s activity can be described in logico-mechanical terms; that judges apply a single rule or set of rules when they render a verdict, and so on. These are nothing but misunderstandings, misrepresentations and misinterpretations of basic concepts underpinning normative systems.

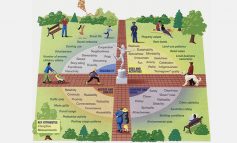

The idea that three (or even more) normative propositions could guide an agent’s behaviour is over-simplistic. For example, Asimov’s first law instantiates the archetype of a legal rule: ‘You ought not to kill’. So if someone does kill another human, we are authorized to punish him, right? The problem, however, is that this rule on its own would not have been functional even in the time of Moses, let alone in our increasingly complex social reality. A set of rules operating in any area of social life must be able to process far more combinatorial possibilities in order to treat like cases alike and different cases differently (e.g. killing in self-defence). We would need nothing less than an infinite number of rules covering possible exceptions to primary rules, general principles, rules of liability, rules of adjudication and so on, to name only a few examples. For even the seemingly simplest decisions, the so-called ‘easy cases’, deploy and validate large sectors of a legal order. As Wittgenstein memorably remarked: “To understand a sentence means to understand a language.”[8]

Computers and Language

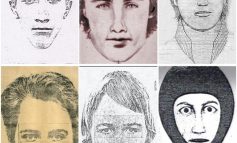

This insight leads us to the second and, in my opinion, most important question: Do computers understand (any) language? The answer is unequivocally ‘no’, simply because computer programs are defined by their syntactic structure. Understanding human language is a radically different process, as it involves semantics and grammar. The meaning of a word is its rule-governed use, and this involves two-way abilities. The ability to follow a rule (not merely the ability to act in accordance with a rule) is closely related to intentionality and to the ability not to follow a rule. IT researchers fail to understand that, although a supercomputer could ‘find’ in the cloud every word ever written, the individual case to be adjudicated is not already a member of any reference class. An algorithm cannot identify (or decline to identify) a new case as an instance of a general rule which we could then apply mechanically. Each new decision is a step into the void, since the fact-finder must establish a similarity relation in order to bring the case within the scope of a legal rule. This is an insurmountable problem for a computer running an algorithm, no matter how ‘sophisticated’ its programmers claim the algorithm to be.

We need algorithms to operate a computer. But to communicate, and to deal with the complex normative structures that systematize a vast area of legal norms, we need semantics.

IT researchers and A.I. visionaries fail to grasp the historic lesson we have learned at least since the Prussian Civil Code (1794), with its more than 20,000 paragraphs. In a constantly evolving world characterised by a radically unpredictable future, any codification that attempts to be completely comprehensive would, only moments after its enactment, need radical revision in order to capture the multitude of situations that (can) occur in real life. True, if the elements of a substantive legal rule (e.g. murder) are proved, then, all other things being equal, the judge is authorized to apply the legal consequence (e.g. life imprisonment). But this is about structure, not semantics. In law we constantly face a problem of (unstable) semantics, not a structural one, due to the indeterminacy of language. Legal orders are closed systems, but only in an operational sense. Deducing legal decisions from rules with a predetermined meaning, and describing the judge’s activity in logico-mechanical terms, fail both on the syntactic level (the complexity of any legal order poses an intractable problem for any computer) and on the semantic level: law is open textured.

Moreover, legal orders are much more than a “helter-skelter of uncoordinated individual norms”.[9] They require systematization through legal theories (i.e. legal dogmatics) that secure the coherence and unity of the legal order. Last but not least, the term ‘harm’ is undefined. What constitutes harm? The allegedly operable ‘Laws of Robotics’ presuppose an extensive set of rules introducing values into a legal order. ‘Harm’ is not a thing but a normative ascription.

Wild Claims

Despite our sinister reality and the existential threats humanity is facing, a conceptually confused, pompous and exaggerated field is siphoning funds from other, legitimate areas of research. So why do we discuss a dystopian future in which robots become our destructive overlords instead of first dealing with the warlords of the present, those with or without a Nobel Peace Prize?

We can hazard a guess. Nietzsche long ago diagnosed that behind most cognitive claims there is basically the will to power (and investors’ money!). The wild claims from the field, e.g. that it can fully automate legal operations and help companies save money, distract from the accompanying message: ‘please buy our software’.

The irony of this episode is that proper research is not necessarily costly in terms of time and money. Learning from the past and from neighbouring disciplines can also help us save resources and, last but not least, prestige! Setting out to establish ‘Machine Ethics’ is not like the glorious quest to conquer uncharted territories. It is more reminiscent of Winnie the Pooh’s aspiration to find the East Pole.

[1] For more information see http://ec.europa.eu/programmes/horizon2020/en/h2020-section/fet-flagships (14/12/17).

[2] See e.g. Benjamin Kuipers, Beyond Asimov: how to plan for ethical robots, in: The Conversation, 02/06/16 (http://theconversation.com/beyond-asimov-how-to-plan-for-ethical-robots-59725).

[3] Isaac Asimov, Runaround (New York City: Doubleday, 1942), p. 40.

[4] See e.g. John von Neumann, The Computer and the Brain (New Haven/London: Yale University Press, 1958).

[5] S. K. Bansal, Artificial intelligence (New Delhi: APH Publ., 2013), p. 1.

[6] John R. Searle, Minds, Brains and Science (Cambridge, MA: Harvard University Press, 1986), p. 11.

[7] See http://neurofuture.eu/ (14/12/17).

[8] L. Wittgenstein, Philosophical Investigations, tr. by G. E. M. Anscombe, 1958, § 199.

[9] W. Ebenstein, The Pure Theory of Law: Demythologizing Legal Thought, in: 59 California Law Review (1971), p. 637.